Fluorescence Imaging

This is a brief description of a fluorescence imaging system developed to reduce the harmful effects of excitation light during experiments on live cells. The basic idea is to split frames off a streaming video signal, coordinated with strobed LEDs, and reassemble the images into multiple video windows, effectively streaming video from multiple light sources “simultaneously” with a single camera. In normal operation, say, every 10th frame is used for fluorescence while the rest are used for normal visualisation, which reduces the time of exposure to the excitation light by 90%. Additionally, some primitive signal amplification can be performed prior to display, allowing the excitation light intensity to be reduced and further minimising cell damage from the excitation light.

Micromanager Plugin

The LEDs and some basic video parameters are controlled by an ImageJ plugin that works with Micromanager. Currently, up to three independent light sources can be used, each streaming to a dedicated video window. Additionally, any two of these can be merged and displayed in a fourth video window, with one set of images as the “foreground” (coloured green) and the other as the “background” (in grey scale). This merge is intended to highlight fluorescent objects.
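
As an illustration of this kind of merge, here is a minimal sketch. It assumes 8-bit grey-scale frames of equal size and an additive, clamped blend into the green channel; the plugin's actual blending rule is not described here, and the class and method names are hypothetical.

```java
/** Hypothetical helper: overlays a foreground frame (green) on a grey-scale background frame. */
final class MergeSketch {
    /**
     * Both inputs are 8-bit grey-scale frames with one byte per pixel.
     * Returns packed 0xRRGGBB pixels.
     */
    static int[] merge(byte[] background, byte[] foreground) {
        if (background.length != foreground.length) {
            throw new IllegalArgumentException("frames must have the same size");
        }
        int[] rgb = new int[background.length];
        for (int i = 0; i < rgb.length; i++) {
            int grey = background[i] & 0xFF;       // unsigned byte -> 0..255
            int fg = foreground[i] & 0xFF;
            int green = Math.min(255, grey + fg);  // assumption: additive blend, clamped
            rgb[i] = (grey << 16) | (green << 8) | grey;
        }
        return rgb;
    }
}
```

Where the foreground is dark the result stays grey, and fluorescent regions pick up a green tint in proportion to the foreground signal.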

The plugin works by simply setting the camera's register values through properties made available by a modified Micromanager dc1394 adapter. Using this adapter causes the registers to appear as properties, so their values can be set using the Micromanager device property browser. Here is a screenshot showing how they appear:

So the Java GUI really just provides an intuitive way to compute and set these values.

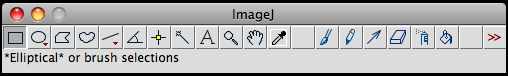

To start the program, first launch Micromanager (which is also an ImageJ plugin), then select the main ImageJ window.

With this as the active window, you can find the StrobeLight plugin at:

Plugins>StrobePlugin>StrobeLight

Note: If the Micromanager GUI is the active window, you will see a “Plugins” tab in the menu bar, but it will not give you access to the StrobeLight plugin.

Once launched, the StrobeLight GUI should be visible. It will look like the following, but with default values set:

The above shows the program in a pretty useless state, but it illustrates everything that you can use it for. The window is divided into four panels and an information bar for intuitive use.

Strobe Pattern Control

This is where you set the pattern of pulses for up to three independent LEDs. In this example, the first 7 elements are configured in a “normal” working mode (a blue flash followed by 6 red flashes). The eighth element gives a dark frame, and the next five show how combinations of colours appear. The last three elements appear in the background colour, which shows that the strobe pattern is truncated at thirteen elements rather than using the maximum of sixteen. The “Channel X” check boxes allow you to enable/disable the LED triggers individually. The “View Live” button starts video acquisition (and toggles to “Stop”), and the “Refresh” button updates all the values in the GUI.

I/O Pin Configuration

This panel allows you to configure each of the I/O pins on the camera as input or output. By default pin0 is set for input (with an external trigger in mind) and the others are set for output. It is not expected that these will be changed often.

General Settings

This panel gives access to some of the camera properties made available by the dc1394 adapter.

- The “Frame Rate” drop-down list lets you select from the camera's allowed values.

- The “Embed Frame Information” check box allows you to enable/disable all strobe patterns at once, because coordinating the images with the light flashes requires overwriting the first few pixels in every image with information about its position in the strobe cycle.

- The “Foreground Channel” button group allows you to select which stream is to be used as the foreground of the merged images.

- The “Background Channel” button group allows you to select which stream is to be used as the background of the merged images.

- The “Foreground Gain” slider allows you to amplify the foreground signal digitally. Currently it simply multiplies all pixel values by the gain value, which is displayed in the information bar at the bottom of the window.
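
A digital gain of this kind can be sketched as follows. This assumes 8-bit pixels and clamps the result at 255 so that bright pixels saturate rather than wrap around; the clamping and the names are assumptions, not a description of the plugin's code.

```java
/** Hypothetical helper: multiplies 8-bit pixel values by a gain factor. */
final class GainSketch {
    static byte[] applyGain(byte[] pixels, double gain) {
        byte[] out = new byte[pixels.length];
        for (int i = 0; i < pixels.length; i++) {
            int value = pixels[i] & 0xFF;            // unsigned byte -> 0..255
            long scaled = Math.round(value * gain);  // multiply by the gain value
            // assumption: saturate at the 8-bit limits instead of overflowing
            out[i] = (byte) Math.max(0, Math.min(255, scaled));
        }
        return out;
    }
}
```

Because the gain is applied after acquisition, it amplifies noise along with the signal, which is why it is only a primitive substitute for brighter excitation.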

Register Values

With the exception of “Strobe Pattern Length”, this panel is mostly for information. Formally, this plugin works by getting/setting the camera's register values through the modified dc1394 adapter. The intuitive binary string 0111111001011000 used to establish the strobe pattern for channel one in the above image must be passed to the adapter as its decimal representation, 32344. Converting between these bases automatically is one of the advantages of using the GUI, though the properties can all be set (using the decimal forms) in the Micromanager properties browser.
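
The base conversion itself is small. A minimal sketch, assuming patterns are at most sixteen elements long and are written most significant bit first (the class and method names are hypothetical, not taken from the plugin):

```java
/** Hypothetical helper: converts strobe patterns between binary strings and decimal values. */
final class StrobePatternCodec {
    static final int MAX_ELEMENTS = 16;

    /** Parses a string of 0s and 1s into the decimal value passed to the adapter. */
    static int toDecimal(String bits) {
        if (bits.isEmpty() || bits.length() > MAX_ELEMENTS || !bits.matches("[01]+")) {
            throw new IllegalArgumentException("expected 1 to 16 binary digits");
        }
        return Integer.parseInt(bits, 2);
    }

    /** Formats a decimal register value as a zero-padded 16-digit binary string. */
    static String toBits(int value) {
        if (value < 0 || value > 0xFFFF) {
            throw new IllegalArgumentException("value does not fit in 16 bits");
        }
        String raw = Integer.toBinaryString(value);
        return String.format("%" + MAX_ELEMENTS + "s", raw).replace(' ', '0');
    }
}
```

For the channel one value, `StrobePatternCodec.toBits(32344)` gives `0111111001011000`, and `toDecimal` maps that string back to 32344.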

Information Bar

When it is launched, the plugin checks to see which version of Micromanager is running. If it can't find one, it gives you an error message. If it does, the GUI is initialised, and the running Micromanager version is displayed in the information bar. Additionally, the information bar displays the frame number and current foreground gain.

Clocks and Video Signals

We are using dc1394 (FireWire) cameras for our imaging. There is a well-developed set of instructions that programs use to interact with these devices (we use the libdc1394 API to access them). Each camera implements only a subset of the available options, so it is worth giving a quick description of the camera we are using, the Grasshopper by Point Grey Research. In addition to its video capabilities, this camera has four programmable digital I/O lines that we use to synchronise video acquisition with the LED light pulses.

When the camera powers up, it immediately starts streaming video data into a circular (or ring) buffer. Unless it receives a request to do something with the data, the camera soon overwrites them with new images in order to minimise the size of the buffer required. If the camera receives a signal, say a “snapImage” from software, it transfers the video data (in this case, a frame) and frees the buffer again. Sending requests for images repeatedly and reassembling the results is one (cumbersome) way to generate a video stream.
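
The overwrite behaviour of a ring buffer can be illustrated with a toy model. This is only a sketch of the general data structure, not of the camera's firmware; frame numbers stand in for image data and all names are hypothetical.

```java
/** Toy ring buffer: new frames overwrite the oldest ones once the buffer is full. */
final class FrameRingBuffer {
    private final long[] slots;  // frame numbers stand in for image data
    private long written = 0;    // total number of frames written so far

    FrameRingBuffer(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        slots = new long[capacity];
    }

    /** Stores a new frame, reusing the oldest slot when the buffer is full. */
    void write(long frameNumber) {
        slots[(int) (written % slots.length)] = frameNumber;
        written++;
    }

    /** True if the given frame has not been overwritten yet. */
    boolean holds(long frameNumber) {
        long filled = Math.min(written, slots.length);
        for (int i = 0; i < filled; i++) {
            if (slots[i] == frameNumber) {
                return true;
            }
        }
        return false;
    }
}
```

With a capacity of three, writing frames 0 to 4 leaves only frames 2, 3 and 4 available, so a request that arrives late finds the earlier frames already gone.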

By setting up the I/O pin behaviours, we get a series of pulses that depend on the camera's position in its 16-frame counter. These I/O lines are used to trigger LED drivers. For any given frame, the channel sequences dictate which LEDs are on and which are off. Additionally, the frame number is embedded in the corresponding image, and used to direct it to the appropriate video window.
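
As a rough sketch of this routing logic, assume each channel's pattern is a 16-bit value whose most significant bit is the first element of the cycle, and that the cycle wraps at the configured pattern length. Both points are assumptions, as are all names here.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: works out which LED channels fire for a given frame. */
final class StrobeRouter {
    static final int MAX_ELEMENTS = 16;

    /** True if the pattern has its LED on for the given element (0-based, MSB first). */
    static boolean isOn(int pattern, int element) {
        return ((pattern >> (MAX_ELEMENTS - 1 - element)) & 1) == 1;
    }

    /** Returns the indices of the channels whose LED is on for this frame. */
    static List<Integer> activeChannels(int[] patterns, int patternLength, long frameCounter) {
        if (patternLength < 1 || patternLength > MAX_ELEMENTS) {
            throw new IllegalArgumentException("pattern length must be 1 to 16");
        }
        // assumption: the position in the cycle wraps at the pattern length
        int element = (int) (frameCounter % patternLength);
        List<Integer> active = new ArrayList<>();
        for (int channel = 0; channel < patterns.length; channel++) {
            if (isOn(patterns[channel], element)) {
                active.add(channel);
            }
        }
        return active;
    }
}
```

For example, with the patterns `{32344, 32768}` and a length of 13, where 32768 is an invented second channel that flashes only on the first element, frame 0 activates only the second channel, frame 1 only the first, and frame 7 is a dark frame. The returned indices can then be used to pick the video window for each image.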